Basic & Advanced Application

Spark and PySpark are available via the Jupyter + Spark Basic and Jupyter + Spark Advanced interactive applications on Open OnDemand for Armis2, Great Lakes, and Lighthouse.

The Basic application provides a starter Spark cluster with 16 CPU cores, 90 GB of RAM, and a one-day (24-hour) walltime limit. It is designed for beginner Spark users and is especially useful for Python novices who have not yet customized their environment, as well as for newcomers to the Spark ecosystem.

The Advanced application provides a more comprehensive and configurable interface for advanced Spark applications. Here, a user can request more cores and memory than the default, keeping in mind that Spark has fixed requirements for its executors (namely 3 CPU cores and 15 GB of RAM per executor). Users can also load module files or source a setup file in which shell variables are defined. Note that any modules loaded must be compatible with the selected Spark and Python versions.

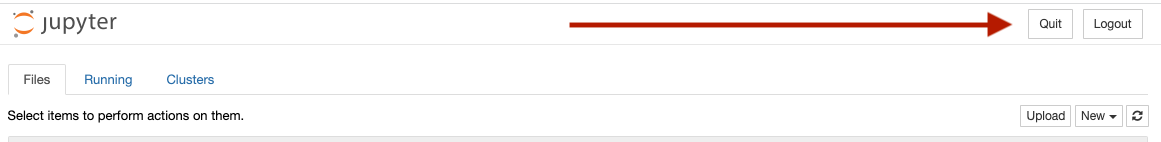
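
When choosing cores and memory in the Advanced application, it can help to check how many executors a request would support. The following is a minimal sketch of that arithmetic, using only the per-executor figures quoted above (3 CPU cores and 15 GB of RAM). The function names `max_executors` and `leftover` are hypothetical helpers, not part of Spark or Open OnDemand, and the sketch assumes that no cores or memory are set aside for the Spark driver, Jupyter, or the operating system, which may not match how the applications actually allocate resources.

```python
# Per-executor requirements quoted in the text above.
CORES_PER_EXECUTOR = 3
RAM_GB_PER_EXECUTOR = 15


def max_executors(total_cores: int, total_ram_gb: int) -> int:
    """Return how many whole executors fit in the requested resources.

    Hypothetical helper: assumes nothing is reserved for the Spark driver,
    Jupyter, or the operating system.
    """
    if total_cores < 0 or total_ram_gb < 0:
        raise ValueError("resources must be non-negative")
    by_cores = total_cores // CORES_PER_EXECUTOR   # limit imposed by cores
    by_ram = total_ram_gb // RAM_GB_PER_EXECUTOR   # limit imposed by memory
    return min(by_cores, by_ram)


def leftover(total_cores: int, total_ram_gb: int) -> tuple[int, int]:
    """Return (cores, RAM in GB) that no executor can use under this sketch."""
    n = max_executors(total_cores, total_ram_gb)
    return (
        total_cores - n * CORES_PER_EXECUTOR,
        total_ram_gb - n * RAM_GB_PER_EXECUTOR,
    )
```

Under these assumptions, `max_executors(16, 90)` returns 5 for the Basic application's resources, because cores are the limiting factor (16 // 3 = 5, while 90 // 15 = 6). Requesting cores in multiples of 3 and memory in multiples of 15 GB keeps `leftover` at `(0, 0)`.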

Note that closing the browser tab of the Jupyter Server or Jupyter Notebook DOES NOT stop the Spark cluster, which keeps running in the background. Your account will continue to accrue charges until you explicitly stop the job or the walltime expires. To stop the job, click the ‘Quit’ button in the upper right of the Jupyter Server web page.